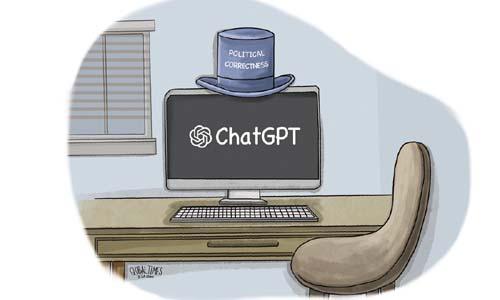

AI has a political stance, associated with ideology

Ding Gang

I don’t believe ChatGPT is a “knowledge bot” without a political stance. Some people claim that all its answers are neutral, or tend to be neutral, and therefore the AI chatbot is balanced and perfect.

A neutral answer to questions in the social sciences, such as political, cultural, and philosophical issues, is slick at best or, to put it bluntly, impossible. This is because answering or analyzing questions in such disciplines requires a certain amount of personal and political emotion, and a stance, to begin with.

ChatGPT’s biggest flaw is that it has no emotions. For example, it cannot write the emotional and political commentary of the time that Dickens wrote at the beginning of A Tale of Two Cities: “It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness.”

Nor can it be expected to write a classic novel complete enough to influence the human reading experience and spiritual world, as Dickens did.

Of course, this does not mean that it will never learn to have feelings or political positions as AI technology develops. That will depend on how, and with what kind of knowledge, experience and culture, humans nurture it.

It’s a bit premature to discuss this issue when we only have two versions of ChatGPT. After all, humans invented it mainly to help people do something or to get basic knowledge, not to have political standards like humans or be able to love, hate, feel pain, sadness, empathy, and joy.

Strictly speaking, it is a student, an outstanding student. AI technology does have the ability to make its own choices. But those choices will depend on its sources of knowledge: what kinds of knowledge, experience, positions and perspectives humans “load” into its “inventory.”

Because of this, its answers to questions of a political or ideological character are never neutral. It can only pick and choose according to its knowledge base.

When ChatGPT was asked to comment on former president Donald Trump, it is said to have politely declined. When asked to comment on the current president, Joe Biden, it used the word “terrific” and called him a very impressive president.

On the future of China, it used tendentious formulations, such as the claim that China “could challenge the world order,” which clearly “mimics” the way Western governments and media reports portray China. This concept is unacceptable to China and does not exist in China’s “knowledge base” about its future development. Indeed, China is not such a challenger.

The emergence of such a politically charged concept reflects the West’s own perception of China. ChatGPT has chosen this formulation because it has such a basic concept in its knowledge base, which is in line with mainstream Western opinion and understanding.

This is the point I have emphasized in several reviews: There are limitations to the concept of politics in the existing world social science system. This system has a great deal of theoretical and historical cognition shaped by the Western experience and is also strongly tinged with the emotional experience of Westerners. Non-Western experiences and perceptions remain non-mainstream, or even peripheral.

However, the world social science system is not static and will evolve as more non-Western countries develop. Therefore, ChatGPT faces the same challenge as the human social knowledge system: Applying Western experiences and theories to explain or judge the development of non-Western countries is insufficient and sometimes dangerous.

Understanding AI from this perspective means seeing that it is no longer a purely technological competition but has been, and will be, ever more closely associated with ideology.

The writer is a senior editor with People’s Daily, and currently a senior fellow with the Chongyang Institute for Financial Studies at Renmin University of China